
Website developers are not always as savvy as SEO practitioners when it comes to building websites that perform well in search. Development requires one set of technical skills; SEO requires another. By following a standard checklist, you can follow Google’s Webmaster Guidelines while also getting ahead in the all-important competitive space in SEO. This checklist looks at site speed optimization, structured data, HTML improvements, cross-platform compatibility, and other development tasks from a developer’s perspective in the context of SEO.

By examining these items in further detail, we can identify potential roadblocks and bottlenecks most commonly associated with the website development life cycle. This checklist identifies items routinely covered in Google’s webmaster tools, and then some, to ensure that you have all bases covered for a successful website optimization, every time. In addition, this checklist will also cover lesser known but crucial items that are important to tackle during development before they go live on the site.

Is My Google Webmaster Tools Properly Installed? Is Google Analytics (Or Stats Tool of Choice) Installed?

Most websites use Google webmaster tools and Google Analytics as their statistics tracking program of choice. Of course, there are other options. But tracking programs are worthless if they are not configured correctly.

Checklist Item #1: Is My Traffic Tracking Program Installed Correctly?

If your tracking program is not installed properly, it may report erroneous data or, worse, duplicate data that shows an increase when there is none. At its simplest, make sure that each tracking tag (if needed) is installed only once on the page. Multiple or erroneous installations can become major headaches later. Google recommends using Google Tag Manager for more complex analytics installations.
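
One way to check for duplicate installations is to count how often a tracking loader script appears in a page’s HTML. The following is a minimal sketch in Python, assuming the tags are included as script elements with a src attribute. The list of loader URLs and the function name `duplicate_trackers` are illustrative assumptions, and tags injected by inline snippets or by a tag manager would not be detected this way.

```python
from collections import Counter
from html.parser import HTMLParser

# Substrings of loader URLs to look for (an assumption; extend for other trackers).
TRACKER_HINTS = (
    "googletagmanager.com/gtag/js",
    "google-analytics.com/analytics.js",
)


class TrackerScriptCounter(HTMLParser):
    """Counts <script src=...> tags whose URL matches a known tracker loader."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src") or ""
        if any(hint in src for hint in TRACKER_HINTS):
            self.counts[src] += 1


def duplicate_trackers(html):
    """Return {script URL: count} for tracker scripts included more than once."""
    parser = TrackerScriptCounter()
    parser.feed(html)
    return {src: n for src, n in parser.counts.items() if n > 1}
```

An empty result means no tracker script was found twice; it does not prove the tracker is configured correctly.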

Look Into This: Mobile First Development

A mobile-first index coming from Google has long been rumored, and finally, it’s no longer a rumor. In web development, considering a mobile-first approach is always a good idea.

Checklist Item #2: Is Your Site Mobile-Friendly?

Mobile-friendly can mean two different things in the development world.

- Does the site adhere to standard development techniques using a responsive CSS stylesheet with media queries? Or…

- Does the site utilize a separate mobile subdomain (m.domain.com)? While there is nothing inherently wrong with using a mobile sub-domain, it can make SEO more difficult.

Any time you utilize a sub-domain, you are telling Google, in essence, that it is a second physical web property. Compared to using a sub-folder, which is typically an extension of a site, the sub-domain will be considered a separate web property and can be harder to manage when it comes to certain SEO tasks like link acquisition.

Checklist Item #3: Mobile URL Structures Leading to Duplicate Content

It is this author’s opinion that a site using the m.domain.com structure should be developed in such a way that its URL structures are not identified as duplicate content.

Duplicate content can arise from using multiple URLs to show the exact same content. When creating a mobile site using a mobile sub-domain, the general best practice is to make sure a rel=canonical tag is used to show the desktop site as the original source of content. This can help prevent duplicate content issues. Please note that Google’s developer guidelines also recommend doing this.
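
A quick way to confirm the canonical tag on a mobile page is to parse the page and compare the tag’s href with the desktop URL. This is a rough sketch in Python using only the standard library; the class and function names and the example URLs are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_points_to(html, expected_url):
    """True only if the page declares exactly one canonical URL and it matches."""
    parser = CanonicalFinder()
    parser.feed(html)
    return parser.canonicals == [expected_url]
```

For example, `canonical_points_to(mobile_html, "https://www.domain.com/page.html")` would be run against the HTML served at the matching m.domain.com URL. Requiring exactly one canonical tag is a deliberate choice here, since conflicting tags are themselves a problem.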

In addition, make sure that URL structures during development don’t get out of hand, especially when setting up a site for https:// (Hypertext Transfer Protocol Secure). It is standard development practice to obtain a security certificate for a site that uses https://, but be careful. If you do not purchase the right certificate, you can create crawling problems due to soft 404s. It’s like this: if the same page of content is served on both the https://www and the bare https:// versions of the domain, the result is crawl problems and duplicate content, because Google easily gets confused by multiple URLs showing the same content.

To avoid this issue, purchase the wildcard version of the secure certificate, which helps make sure that duplicate URLs are not created during development. Otherwise, you will have to set up 301 redirects manually, which can become a nightmare on a larger site.

Because of the inherent problems of developing a site with secure, mobile, and other types of URLs as noted above, it is usually best to get a wildcard secure certificate and develop the site from the ground up using a single style sheet to control your layout across devices. This way, you preserve the content on mobile that Google likes to see, you have the opportunity to reduce calls to the server (greater site speed and fewer bottlenecks as a result), and you reduce URL structure issues by attacking all of the problem areas at once.

Look Into This: Schema.org Structured Data

Schema.org microdata is becoming more important on websites, and creating sites by coding Schema.org microdata properly is crucial.

Checklist Item #4: Is My Structured Data Coded Properly?

Manually checking your Schema.org structured data with Google’s Structured Data Testing Tool is a good process to have, and it will help you become a more successful coder. Without it, you’re flying blind and at the mercy of Google’s confusion if the data is not correctly executed. While the tool uncovers the most common errors, other minor errors can slip past it. These are crucial to get right, so let’s examine what can happen if you don’t.

Take the following example: You’re creating a website for restaurants and you want to include each restaurant’s name and location information. After a first pass at coding the Schema.org structured data, you notice some strange punctuation and contextual errors within it. For example:

<div itemscope itemtype="https://schema.org/Organization"><span itemprop="name">Copyright Company Name, All Rights Reserved</span></div>

<div itemscope itemtype="https://schema.org/Organization">Copyright <span itemprop="name">Company Name</span>, All Rights Reserved</div>

Notice in the first example that the copyright declaration is included within all the tags. In the second example, the Company Name is nicely placed between the opening and closing span tags. This second example is what we want.

This is one example of contextual coding errors that can occur when coding Schema.org microdata. These types of errors will not show up in any automatic tool like Google’s webmaster tools, and thus it is necessary to perform a manual check to look for these errors. If the first coding example is used, Google will show the rich snippet as “Copyright Company Name, All Rights Reserved” instead of showing the rich snippet as “Company Name” which is what should happen.
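
Part of this manual check can be scripted. The sketch below, in Python, pulls the text of every element marked with a given itemprop and flags values that contain boilerplate such as a copyright notice. It assumes the company name is marked up with `itemprop="name"`; the word list and the function name `suspicious_names` are illustrative, not part of any Google tool.

```python
from html.parser import HTMLParser

# Phrases that should not appear inside a name property (an assumed list).
BOILERPLATE = ("copyright", "all rights reserved")
# Void elements never get a closing tag, so they must not affect nesting depth.
VOID = {"br", "hr", "img", "input", "link", "meta"}


class ItempropText(HTMLParser):
    """Collects the text found inside elements carrying a given itemprop."""

    def __init__(self, prop):
        super().__init__()
        self.prop = prop
        self.depth = 0      # > 0 while inside a matching element
        self.values = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("itemprop") == self.prop:
            self.depth = 1
            self.values.append("")

    def handle_endtag(self, tag):
        if tag not in VOID and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.values[-1] += data


def suspicious_names(html, prop="name"):
    """Return itemprop values that contain boilerplate text."""
    parser = ItempropText(prop)
    parser.feed(html)
    parser.close()
    return [v.strip() for v in parser.values
            if any(phrase in v.lower() for phrase in BOILERPLATE)]
```

Run against markup like the first example, the function would report the whole copyright line; run against the second, it would report nothing. A human still needs to review the output, since the word list only catches the errors you already expect.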

By implementing a thorough checking process before the site ever goes live, as a developer, you can take charge and make sure these types of issues don’t crop up later during the SEO process.

Look Into This: Check Your Robots.txt File

Sometimes, during development, it may be necessary to completely block crawler access to the domain before it goes live. Usually, this is done by using the following directive in a robots.txt file on the server:

User-agent: *
Disallow: /

However, I have run into quite a few instances where this directive was left in place and completely forgotten after launch. The client calls me, wondering why their site is not performing. Always make sure a site-wide disallow directive is not left in robots.txt on the live site. It prevents crawling by the search engines and can be a major damper on a website’s performance.

Now, there is a big difference between “Disallow:” and “Disallow: /”. For non-SEO practitioners, these directives can seem confusing.

- Disallow: (without the forward slash) means that all search engine spiders and user agents can crawl the site without issue from the site root down.

- Disallow: / (with the forward slash) means that everything from the site root down will be fully blocked from search engine crawling.

Checklist Item #5: Does My Robots.txt File Have a “Disallow From The Root Directory” Directive?

As you may imagine, confirming that this directive has been removed before launch is a good check to ensure that search engines can access your site properly.
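
This check is easy to automate as part of a launch script. Here is a minimal sketch using Python’s standard `urllib.robotparser` module; the helper name `root_is_blocked` is an assumption, and the robots.txt content is passed in as a string so the check can run against a file before it is deployed.

```python
from urllib.robotparser import RobotFileParser


def root_is_blocked(robots_txt, user_agent="*"):
    """True if the given robots.txt content blocks the site root for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")
```

Calling `root_is_blocked(open("robots.txt").read())` on the production file and failing the deployment when it returns True would catch a forgotten site-wide block. Note that this only tests the root path, so a rule blocking one important sub-folder would still pass.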

Look Into This: Staging Site Domain Check

As most developers are aware, during the development process a staging site is created for the purpose of testing new code, previous versions of the site, and correcting other issues before it goes live. A common error during the development process is missing a few instances of a staging site domain when preparing the site for its first launch. This check can help eliminate errors like images not loading properly once the site is switched live, 404 errors due to pages referencing the staging subdomain instead of the live site domain and other issues.

Checklist Item #6: Does My Site Contain Instances of Staging Site Sub-domains?

It is possible, through the use of efficient find and replace techniques, to quickly search for and replace any instances of the staging site sub-domain. For example, in your favorite development program, using Ctrl + H on a Windows machine (the equivalent shortcut on a Mac varies by editor), you can find and replace all occurrences of the staging site sub-domain (for the sake of our example, let’s use “stage.domain.com”). When the site is switched live, every URL should reference domain.com instead of stage.domain.com. But, if a checking process is not implemented, the site could go live with stage.domain.com URLs being referenced everywhere.
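
For a larger code base, the same search can be scripted so it runs before every launch. The following Python sketch reports each line that still mentions the staging host; the function names, the default host “stage.domain.com” from the example above, and the list of file extensions are all assumptions to adapt to your project.

```python
import re
from pathlib import Path


def find_staging_references(text, staging_host="stage.domain.com"):
    """Return (line_number, line) pairs for lines that mention the staging host."""
    pattern = re.compile(re.escape(staging_host), re.IGNORECASE)
    return [(number, line.strip())
            for number, line in enumerate(text.splitlines(), start=1)
            if pattern.search(line)]


def scan_tree(root, staging_host="stage.domain.com",
              suffixes=(".html", ".css", ".js", ".php")):
    """Yield (path, line_number, line) for every hit under the root directory."""
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(errors="ignore")
            for number, line in find_staging_references(text, staging_host):
                yield path, number, line
```

This only reports matches and does not rewrite files, which keeps the replace step a deliberate, reviewable action. References stored in a database rather than in files would need a separate check.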

Please note: This check will likely not be needed if relative URLs were used during development instead of absolute URLs. For those who are not familiar with the distinction, a relative URL drops everything before the first sub-folder, leaving it looking like this: “/folder1/folder2/page.html”. An absolute URL includes the protocol and domain at the beginning, leaving it looking like this: “https://domain.com/folder1/folder2/page.html”. The check is only necessary where absolute URLs are used, so depending on your site’s configuration, you may not need this step at all.

Look Into This: HTML Errors

Common HTML errors can often lead to poor rendering of the site on various platforms, which in turn can cause issues with user experience and site speed. This can then cause issues with site performance from a rankings perspective. While this is NOT a direct rankings impact, it can be an indirect one, much like when W3C valid code is not used. W3C validity is not required for ranking, but it can lead to an indirect increase in rankings because valid code can help improve site speed through better-optimized layouts and coding techniques. Better coding techniques can also make it easier for Google to understand your site.

It is imperative to note here that working through a checklist of common errors will not fix everything, and may produce only a slight blip in performance if other major issues already exist on a site. But these errors are worth fixing when you have all of your SEO ducks in a row.

It is also worth noting here that there are two schools of thought when considering HTML issues. One school of thought believes that correct coding is not essential, that the site will perform and do well in Google anyway. Another school of thought believes that correct coding can lead to increased performance including increased performance in the search results. Who is right? It is this author’s opinion that the correct answer is to always validate your coding.

As a born-and-raised developer turned SEO, it has always been my own best practice to perform complete W3C checking and validation on my sites WHEN I have complete and full control over them. Is it always possible to achieve that kind of coding nirvana? I don’t think so. There are situations where this coding ideal is not possible, so it is necessary to judge the situation for yourself, as not everything discussed here will always apply.

Checklist Item #7: Does My Site Contain Major Instances of Coding Errors?

One common coding error is the use of polyglot documents: documents that were coded for one document type but implemented on a new platform with another document type. When I was in law firm SEO, I ran into this often when developers were attempting to cut corners. Documents coded in this manner typically caused the W3C validator to throw many errors, sometimes thousands.

To the inexperienced, it seems as if you would need to change every HTML tag or other piece of code in order to comply with W3C standards. Not at all. The errors usually turned out to be a case of pure ‘copy and paste’: code written for one document type had been pasted into a template declaring another. Where budgets, project scoping, and other issues squeezed development time, it could be impossible to implement the right fix, which is to make sure all code adheres to the chosen document type.

The simple solution, especially on large sites where large budgets, inter-departmental cooperation, and third parties are involved, would be to simply change the DOCTYPE line to match the document type the code was actually written in.

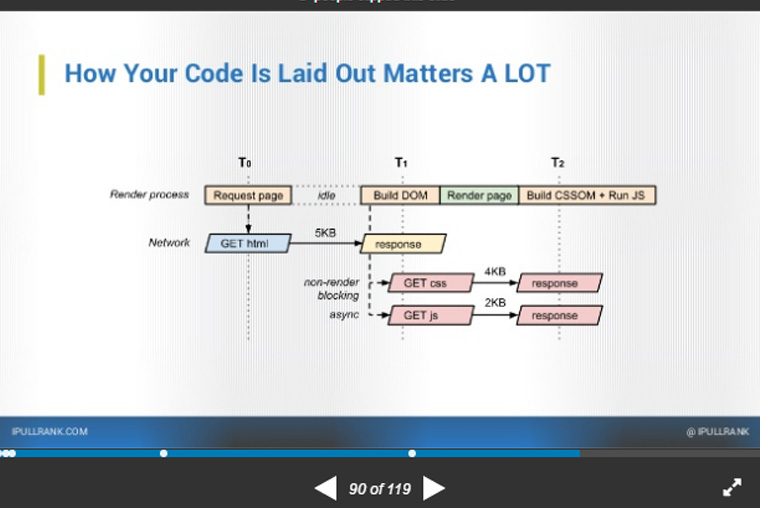

Checklist Item #8: Does My Site Contain an Efficient Code Layout?

How code is laid out is extremely important, as explained by Michael King, Founder and Managing Director of iPullRank. Code layout can impact rendering times, overall site speed, and eventually the final performance of the site. From a server perspective, this is a crucial step to get right.

From the client-side perspective, this can also impact load times. When considering client-side coding, let’s time travel a bit. Back in the olden days of 1998, it was not uncommon to find code layouts with 2400 rows and 2400 columns in table-based, non-CSS designs. Here, in 2017, it is also not uncommon to find bloated code layouts with 50 rows and 50 columns created entirely in nested DIVs, where the number of rows and columns is inappropriate and contributes nothing to the overall layout.

Of course, I am exaggerating the numbers. By putting a little thought into a layout, it is possible to compress some bloated layouts down to three DIVs (and maybe one or two nested DIVs): header, content, and footer. That applies to the client side. On the server side, efficient development practice for everything from PHP to JavaScript, CSS, and HTML should take into account minification of code as well as better code layout.

One more issue: Meta tags should also be coded according to the DOCTYPE. If the W3C validator throws errors on meta tags, the most likely cause is self-closing meta tags being used in a DOCTYPE that does not provide for them. For example: <meta name="description" content="" /> as opposed to <meta name="description" content="">. The former is a self-closing meta tag, which is required in XHTML documents. In standard HTML5, the trailing slash is not required. Make sure you know the rules and how to implement them when using differing DOCTYPEs. Otherwise, these errors can cause issues.

Look Into This: Image Issues

Images are used in web design to convey mood, tell a story, and make things look cool. However, they can impede the user experience when they become too large. Now, more than ever, with Google’s mobile index looming, it is important to consider image size when it comes to enhancing site speed on mobile. While it has always been a development best practice, not everyone in web development implements best-practice techniques.

Ok, so let’s time travel again. In the olden days of 1998, it was commonplace to make sure that pages never exceeded 35 kB in size. According to HTTP Archive, most web pages now don’t exceed 14 kB in size for the HTML files (not including images). Most web pages now don’t exceed 648 kB as of 12/15/2016, which includes all potential elements (like scripts, fonts, video, images, etc.). What is the best page size to target when developing a site? The smallest size possible given what you are developing for. Any improvement towards faster site speed can help improve your site performance overall.

Checklist Item #9: Are Images Causing Unnecessary Bottlenecks?

It is this author’s opinion that it should be standard for developers to optimize images. In my experience, many developers are not using image compression techniques. For example, it is possible to take an image and compress it down to a manageable file size in Photoshop while maintaining its quality and pixel dimensions. This is usually done on photos by adjusting the JPG settings when exporting an image for the web.

Different Development Platforms: Are Images Unnecessarily Large When They Don’t Have to Be?

This not only takes into account physical download size but also the image pixel dimensions in general (these are not always mutually exclusive, depending on the platform you use). I have found WordPress developers to be a major offender of this particular checklist item. Images will be uploaded to WordPress and resized without much regard for the physical download size of the image.

This can result in a 20 x 20 image on WordPress being 2 MB in size if you are not careful. To make sure this does not happen, always open the image in Photoshop (or whatever image compression program you use) to resize it and compress it properly using lossless compression. Doing this and carefully keeping watch on the final image download size within WordPress will help make sure that unintentional file size increases will not negatively impact your site speed.
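
A simple sanity check for this problem is to compare an image’s file size with its pixel dimensions. The Python sketch below handles PNG files only, reading the width and height from the file header; the function names and the threshold of 4 bytes per pixel (the size of uncompressed 8-bit RGBA data) are assumptions chosen for illustration, not an official limit.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data):
    """Read (width, height) from the IHDR chunk at the start of a PNG file."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def bytes_per_pixel(data):
    """File size divided by the number of pixels in the image."""
    width, height = png_dimensions(data)
    return len(data) / (width * height)


def looks_bloated(data, limit=4.0):
    """Flag files that are larger than raw, uncompressed RGBA pixels would be."""
    return bytes_per_pixel(data) > limit
```

You would pass in the bytes of an uploaded file, for example `looks_bloated(open("logo.png", "rb").read())`. The 20 x 20 image at 2 MB described above works out to roughly 5,000 bytes per pixel, far beyond any reasonable threshold.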

Look Into This: Search Plug-Ins, Other Plug-Ins

Whenever you develop a website, especially on platforms like WordPress, issues can crop up even when the developer is not at fault. Search plug-ins can cause especially harmful, irreparable damage to a website’s reputation if they are not caught early. One such instance can be if a search plug-in creates multiple pages for every single search result typed into the search plug-in. When this happens, not only do you get many blank pages without content, you can also run up the bandwidth bill from your website hosting provider.

Checklist Item #10: Are Any Rogue Plug-Ins Causing Major Issues With SEO?

Other rogue plug-ins can cause major SEO issues, especially when they automatically insert things like links into the footers of your pages. Site-wide footer links for linking’s sake are against Google’s Webmaster Guidelines, which call out “Widely distributed links in the footers or templates of various sites”.

Look Into This: Site Speed and Server Configurations

Utilizing much of the advice in this checklist will help get you to a good spot when it comes to the best options for site speed. There are, however, other issues to consider when server configurations and site speed come into play. Some bottlenecks can be caused by server issues, but it will be up to you to identify and figure out what bottlenecks are being caused by your server. Some common bottlenecks include the following, which are all fairly easy fixes.

First, begin by checking your site speed using Google’s Page Speed Testing tool. They have moved most of their page speed and other insights into their Mobile-Friendly Test Tool. In addition, I recommend also using the site test tool webpagetest.org.

Checklist Item #11: Is Your Server Using GZip Compression?

GZip compression on a server lets the server compress files in such a way that results in faster transfers over a network. It is generally standard on most servers built today, but can sometimes be an issue on some servers. If you don’t know, it is likely already enabled on your host. You can check this by running a test on webpagetest.org.
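
If you would rather check from a script, the server’s response headers tell you whether compression was applied. This is a rough Python sketch using only the standard library; the function names are hypothetical, and `server_uses_gzip` makes a live request, so it is defined here but not called.

```python
import gzip
from urllib.request import Request, urlopen


def is_gzip_response(headers):
    """True if the response headers report gzip content encoding."""
    return "gzip" in (headers.get("Content-Encoding") or "").lower()


def server_uses_gzip(url):
    """Request the URL while advertising gzip support and inspect the reply."""
    request = Request(url, headers={"Accept-Encoding": "gzip"})
    with urlopen(request, timeout=10) as response:
        return is_gzip_response(response.headers)


def gzip_savings(body):
    """Fraction of bytes saved by gzip-compressing the given bytes."""
    if not body:
        return 0.0
    return 1 - len(gzip.compress(body)) / len(body)
```

The `gzip_savings` helper gives a feel for what is at stake: repetitive markup such as HTML tends to compress very well, while already-compressed files such as JPG images gain little.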

Checklist Item #12: Server Time to First Byte Loaded

Is the first byte taking a disproportionately long time to arrive compared with the rest of the page load? If so, this is a bottleneck worth fixing to squeeze out every last bit of performance during the website development stage. Time to first byte measures the “time from when the user started navigating to the page until the first bit of the server response arrived.” Trouble on this metric usually points to a server configuration issue that your server technician can fix.

If you have worked through all the optimization items in this checklist and still have a severely long time to first byte, it’s a good idea to check with your server technician. This should help resolve any outstanding issues behind the problem.
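
Before calling the technician, it helps to have a number in hand. The sketch below is one rough way to measure time to first byte in Python with the standard `http.client` module; the function name and parameters are assumptions, and the result includes connection setup, so it will not match a tool like webpagetest.org exactly.

```python
import http.client
import time


def time_to_first_byte(host, path="/", port=443, use_tls=True, timeout=10):
    """Seconds from starting the request until the first body byte is read."""
    connection_class = (http.client.HTTPSConnection if use_tls
                        else http.client.HTTPConnection)
    connection = connection_class(host, port, timeout=timeout)
    try:
        start = time.perf_counter()
        connection.request("GET", path)        # connects, then sends the request
        response = connection.getresponse()    # returns once the headers arrive
        response.read(1)                       # pull the first byte of the body
        return time.perf_counter() - start
    finally:
        connection.close()
```

A call such as `time_to_first_byte("www.domain.com")` should be repeated several times and averaged, since a single measurement is sensitive to network noise.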

Final Parting Thoughts

Developing websites can be a fun, exciting endeavor. But it can also be a challenging affair fraught with issues that can plague a website’s SEO performance for years to come if you are not careful. Performing an in-depth website audit on an existing site could be the answer you need if you want to solve your website problems.

Otherwise, when developing your website, following the steps in this guide will help you get your site to a great place from an SEO perspective. In the coming year, a mobile-first development approach with an emphasis on site speed will be an essential development step towards winning an edge in the competitive Google SEO landscape.

[“source-smallbiztrends”]